The (Not So) Hidden Sexism Behind Your Favorite Chatbot

Alexandra Lustig

Nov 30, 2023

Buckle up, guys. This is a long one.

Chances are you’ve used a digital voice assistant to help you with things like reminders, grocery lists, alarms, and simple internet queries. In 2022, there were around 142 million users of voice assistants in the United States.

But… have you ever noticed how many of the chatbots you’ve come across or heard about in the last decade have female or feminine-sounding names and voices?

Siri, Alexa, Amy, Debbie, Marie, Cortana… these chatbots, or “digital assistants,” from Apple, Amazon, HSBC, Deutsche Bank, ING Bank, and Microsoft, respectively, all have feminine names.

Even fictional chatbots like Samantha from the movie Her are assigned the female gender.

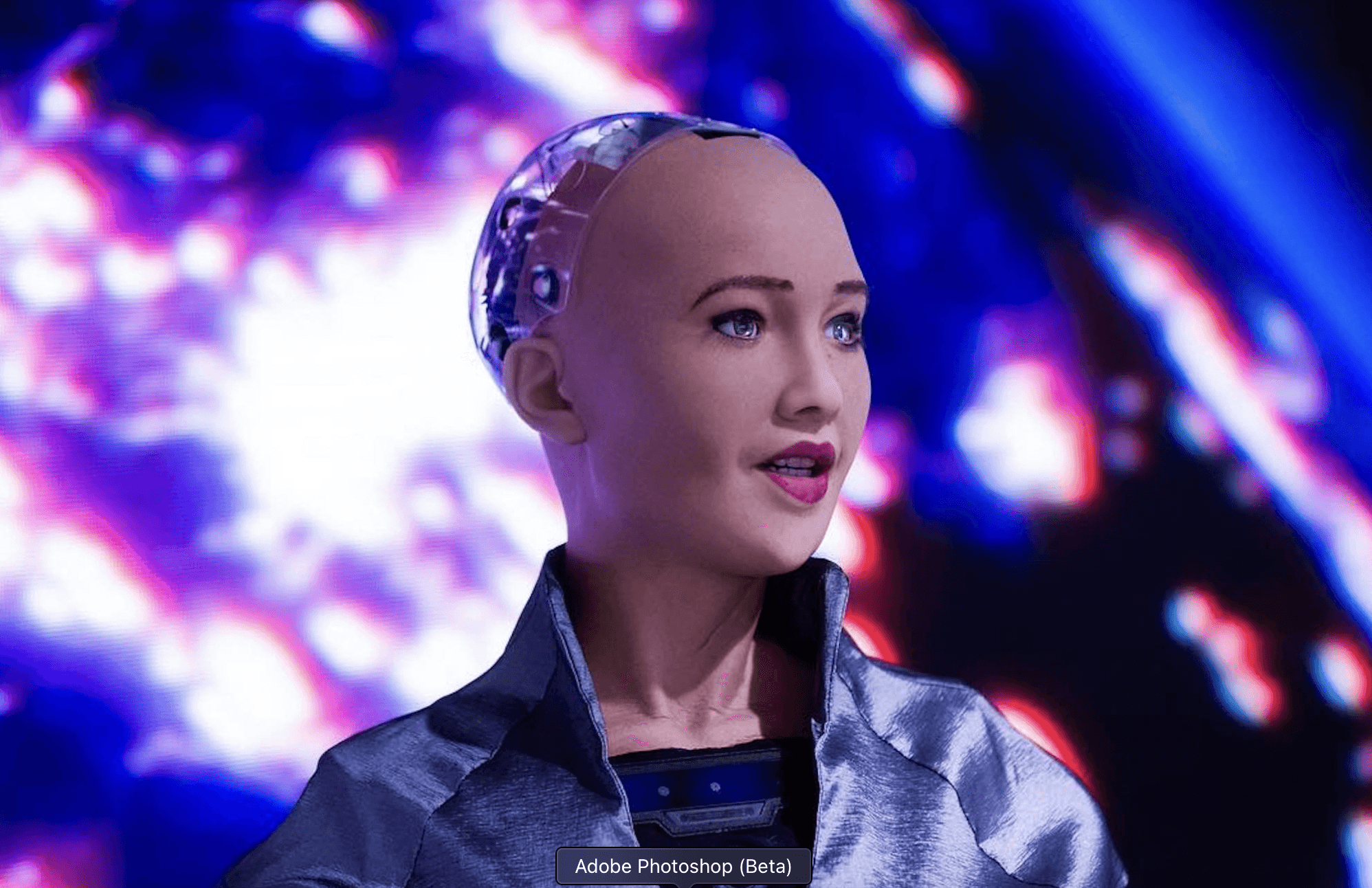

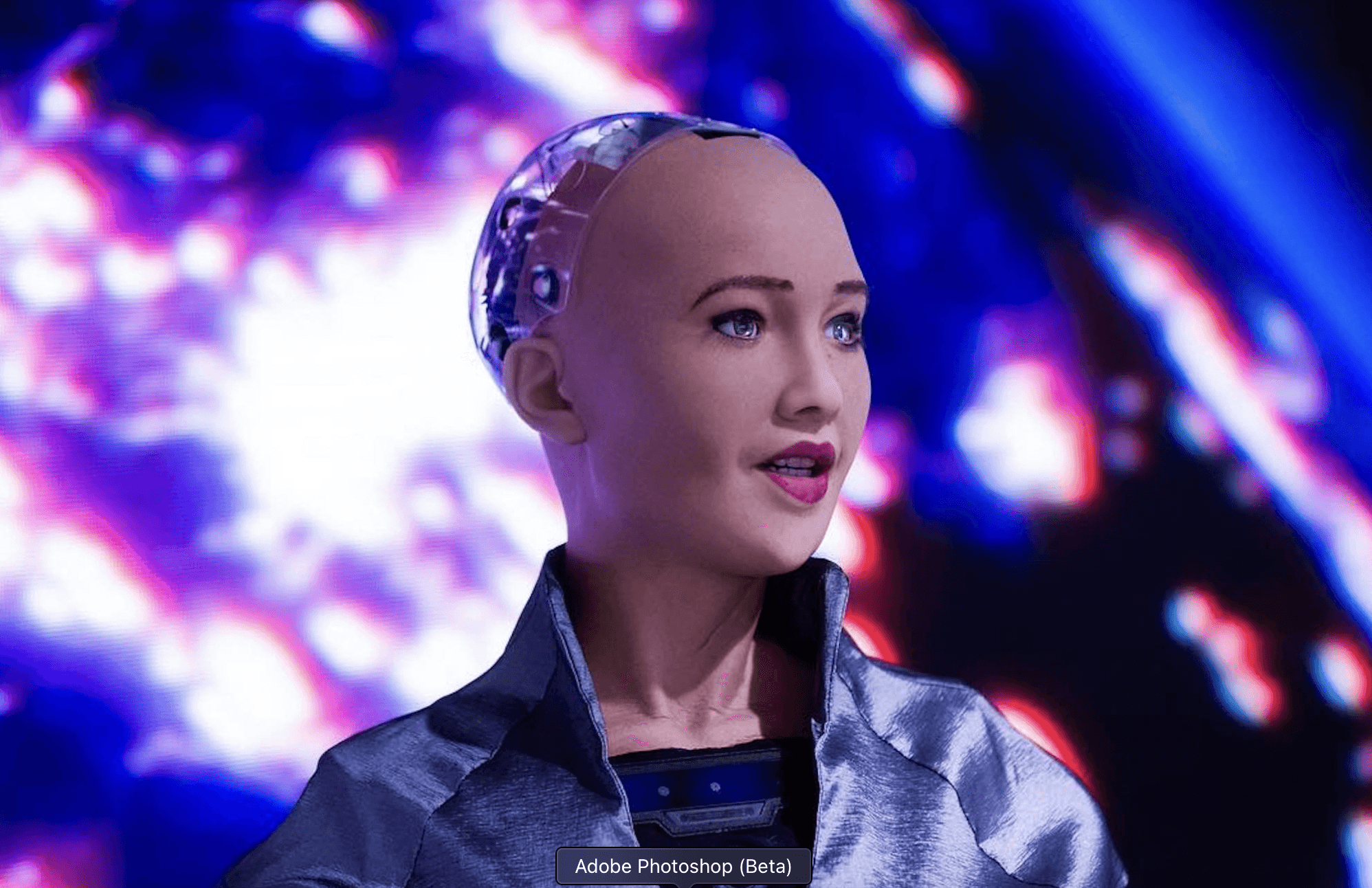

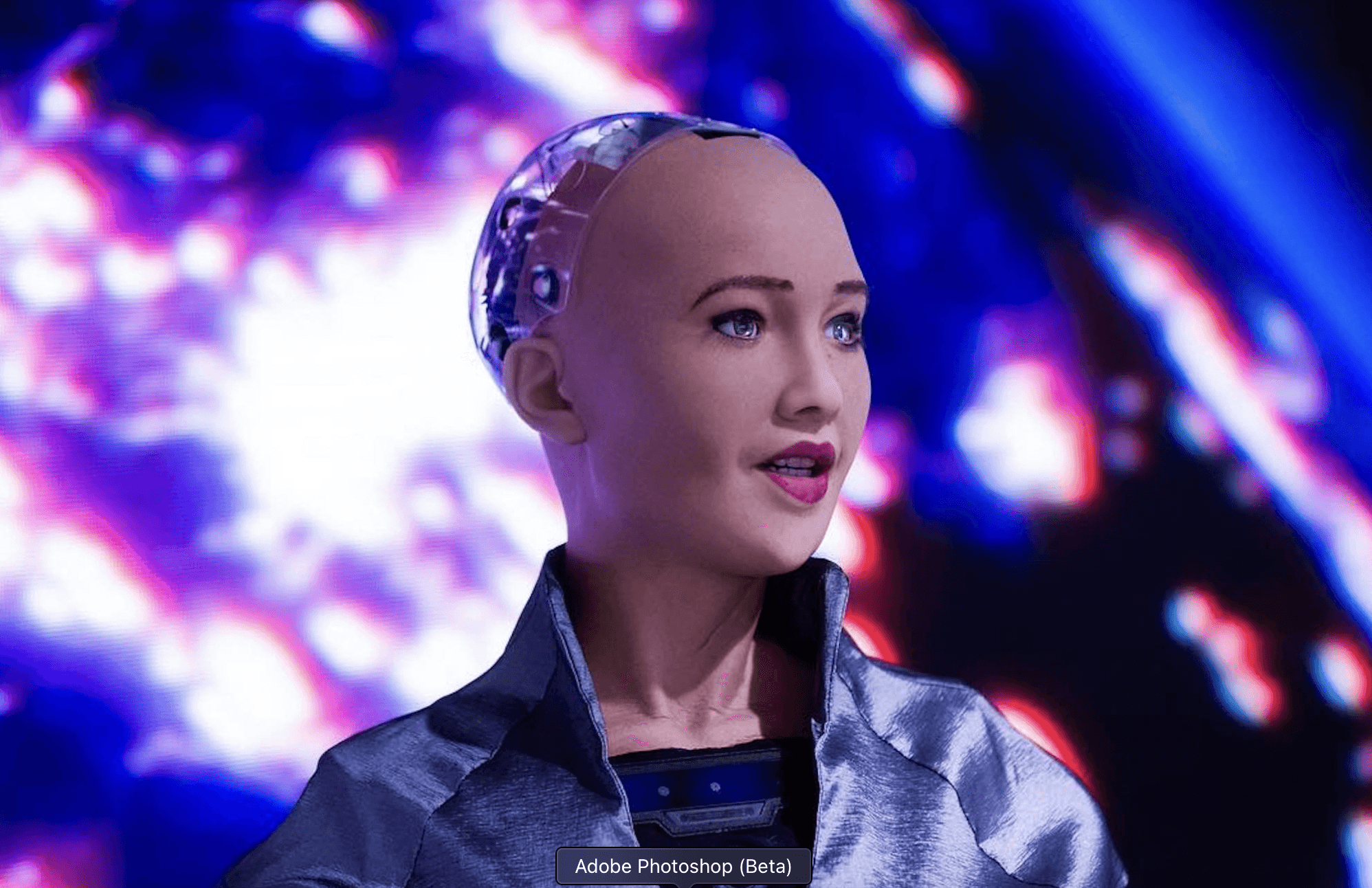

And of course, who could forget about Sophia?

Not only do they have female names, but they are also almost always set to a female voice by default. Researchers at the Institute of Information Systems and Marketing (IISM) have found that “gender-specific cues are commonly used in the design of chatbots and that most chatbots are – explicitly or implicitly – designed to convey a specific gender. More specifically, most of the chatbots have female names, female-looking avatars, and are described as female chatbots.”

But why do tech companies continually inject femininity into their AI products and platforms? Where did that idea even come from? And why should we care?

According to tech companies, research shows that people “respond more positively to women’s voices.” Since product designers need to reach the largest possible number of customers, the reasoning goes, they should give their products a woman’s voice. But why do people like women’s voices? And why would a woman’s voice work so well for a digital assistant?

A 2011 paper by Karl MacDorman, an associate professor at Indiana University’s School of Informatics and Computing who specializes in human-computer interaction, reported that both women and men described female voices as warmer. In practice, women even “showed a subconscious preference for responding to females; men remained subconsciously neutral.”

According to a study published in Psychology & Marketing, “people prefer female bots because they are perceived as more human than male bots.” By “human,” the authors mean that “warmth and experience (but not competence) are seen as fundamental qualities to be a full human.” So, to be warm is to be human, and to be human is to show warmth. And what do machines fundamentally lack? Warmth.

As Jessi Hempel explains in Wired, “People tend to perceive female voices as helping us solve our problems by ourselves. We want our technology to help us, but we want to be the bosses of it, so we are more likely to opt for a female interface.”

So basically, machines are cold and impersonal; women are warm and helpful. This highlights the ethical quandary facing AI designers and policymakers: women are said to be turned into objects in AI, yet injecting women’s humanity into AI objects is exactly what makes those objects seem more human and acceptable.

Tech companies need people to interact with and use their machines, consistently and constantly, in order to make money. By making their digital assistants “female,” they’re leveraging long-held gender biases for their own profit: Since people respond positively to female voices, making the machine a woman helps humans transfer the positive qualities they associate with women onto the machines, and thus makes people use the machines more.

But let’s go a step deeper: why do we all think women are warm and helpful? Why is that association so implicit in our brains that companies can instinctively take advantage of it for profit?

Going back to childhood, the first authority figures we interact with are largely female. Inside and outside the home, mothers, teachers, nannies, and other female caretakers are the people we spend most of our time with, and learn the most from, as children.

“Mothers are the foundation of our society,” says Jill Koziol, CEO and founder of Motherly, and mothers are typically (and stereotypically) the ones who run the household and care for the children. Once those kids start school, 97.5% of their preschool and kindergarten teachers are women. Between home and school, they’re still cared for by women: 94% of childcare workers are female. And when they need other services? Their nurses, medical assistants, and hairdressers work in fields that are roughly 90% women.

These women are the people we interact with most in every part of our lives: teaching us, instructing us, helping us, and empowering us to learn and grow on our own. As a society, we have pretty much always associated women with being warm, nurturing, and helpful, and our stereotypes prescribe those traits as well.

If we take a step back, though, the association between “helpfulness” and women, and women’s jobs, became far more widespread when women entered the workforce in large numbers in the 1950s.

After World War II, many women had to enter the labor force out of necessity. With the growth in office paperwork that began with the Industrial Revolution, the job of secretary became popular, and soon about 1.7 million women were “stenographers, typists or secretaries.” These women often had to work twice as hard to make their bosses look good while being paid significantly less. According to The National Archives, secretaries had to follow many strict rules, both professional and personal, to accomplish this.

Here are some real examples from a Secretarial Training Program in Texas, 1959:

“Smile readily and naturally.”

“Be fastidious about your appearance.”

“Go out of your way to help others.”

“Have a pleasing and well-modulated voice.”

“Be (usually) cheerful.”

“Refrain from showing off how much you know.”

“Be a good listener.”

These guidelines were followed so closely that they shaped the real-life stereotype of the office secretary or assistant that still exists today. Those specific qualities are what engendered the idea that women are more helpful, responsive, and amiable than men.

We want our assistants to help us solve problems, but we want to take all the credit for it.

This draws a straight line to how we define our modern-day assistants, now AI-powered, virtual, and multi-platform. The way we think about which tasks are “suitable” for men vs. women aligns perfectly with our established misogynistic expectations. Here’s the thing: it’s not just that we as a society are conditioned to view digital assistants as stereotypical, subservient female entities; in many cases, the products themselves actively promote gender stereotypes.

Journalist Leah Fessler tested virtual voice assistants’ responses to sexual harassment back in 2017 and found multiple issues. When told “You’re hot,” Amazon’s Alexa replied, “That’s nice of you to say.” To the remark “You’re a slut,” Microsoft’s Cortana returned a web search result for an article titled “30 signs you’re a slut.”

In 2020, researchers from the Brookings Institution re-tested these interactions and found that they had improved somewhat. The voice assistants were more likely to push back against abuse than before, but not always very clearly.

So what can we do to fix this problem? Can we just switch our digital assistants’ voices to “male” and be done? Well, no. First, changing the gender of an interface is not as simple as switching the voice track. The late Stanford communications professor Clifford Nass, who coauthored Wired for Speech, explained that men and women tend to use different words. For example, women’s speech includes more personal pronouns (I, you, she), while men’s includes more quantifiers (one, two, some, more).

Changing the voice’s gender also changes how users interact with the product. If someone using a voice interface hears a male voice using feminine phrasing, they are reportedly likely to be distracted and distrustful. (Here’s another opportunity to delve into why people might distrust a feminine-sounding male voice… but that’s for another time.)

We’re still left with a wider problem, even if we change the voice’s pitch and tweak its vocab. There is still a serious lack of diversity in our AI products and their development, extending beyond gender identity.

In an interview with Kelsey Snell, Angle Bush, founder of Black Women in A.I., talks about some of the real-life consequences of the lack of diversity in AI development, including its effects on law enforcement, mortgage and loan approvals, and more.

One example she offers is a young man in Detroit who was wrongly arrested after AI facial recognition software identified him as a suspect. “Facial-recognition systems are more likely either to misidentify or fail to identify African Americans than other races, errors that could result in innocent citizens being marked as suspects in crimes,” according to The Atlantic. Without clean, current, and diverse data, built and developed by diverse teams of engineers and designers, these systems only serve to maintain our existing unconscious biases.

This lack of diversity in AI and technology development extends to many other facets of our daily lives, and its reach will only grow in the coming years. Many people with darker skin can attest that when they try to use an automatic soap dispenser in a bathroom, they have to wave their hands multiple times or hold a light-colored napkin under it to trigger the sensor. That’s because many of these dispensers use near-infrared light: the sensor shines light at your hand and dispenses soap only when enough of it reflects back. Darker skin absorbs more of that light, so not enough is reflected back to the sensor to activate the dispenser.

It may seem like a funny design flaw, but it again demonstrates tech’s major diversity problem. Excluding people with darker skin from clean hands may be unintentional, but it happens because a company full of white people forgot to test its product on dark skin, and that company is not the only one.
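To make the sensor mechanism and the testing point concrete, here is a toy Python model. It is a minimal sketch under loudly stated assumptions: the emitted-light level, the reflectance values, the 0.8 safety margin, and the helper names (`reflected_light`, `calibrate_threshold`, `dispenses`) are all hypothetical, invented for illustration, and do not describe any real dispenser. It shows how a trigger threshold tuned only on lighter-skinned testers can pass every in-house test and still fail on darker skin.

```python
# Toy model of an infrared soap-dispenser sensor.
# ALL numbers and names here are hypothetical, chosen only to illustrate
# the mechanism; real sensors, wavelengths, and skin reflectance differ.

EMITTED_IR = 100.0  # arbitrary units of infrared light the sensor emits


def reflected_light(reflectance: float) -> float:
    """Light bouncing back to the sensor from a hand with the given reflectance (0.0 to 1.0)."""
    return EMITTED_IR * reflectance


def calibrate_threshold(tester_reflectances: list[float], margin: float = 0.8) -> float:
    """Set the trigger level a bit below the weakest reflection seen during testing."""
    return min(reflected_light(r) for r in tester_reflectances) * margin


def dispenses(reflectance: float, threshold: float) -> bool:
    """The dispenser fires only if enough light comes back to the sensor."""
    return reflected_light(reflectance) >= threshold


# Hypothetical reflectance values for a lighter and a darker skin tone.
LIGHTER_SKIN = 0.55
DARKER_SKIN = 0.20

# Threshold calibrated on a test group with only lighter skin tones.
narrow_threshold = calibrate_threshold([0.55, 0.50])
# Threshold calibrated on a test group that includes darker skin tones.
diverse_threshold = calibrate_threshold([0.55, 0.40, 0.20])

for name, threshold in [("narrow test group", narrow_threshold),
                        ("diverse test group", diverse_threshold)]:
    print(f"{name}: lighter skin -> {dispenses(LIGHTER_SKIN, threshold)}, "
          f"darker skin -> {dispenses(DARKER_SKIN, threshold)}")
```

With these made-up numbers, the narrow test group yields a threshold of 40, so the darker hand (reflecting 20) gets no soap; the diverse group yields a threshold of 16, so both hands trigger the sensor. The numbers themselves mean nothing; the point is that a flaw like this only shows up if the people you test on reflect the people who will actually use the product.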

The less diversity we have in tech, the more major design flaws we will see that disproportionately affect people of color.

So, can tech be racist or sexist?

Yeah, definitely.

Remember when Microsoft created “Tay,” an innocent AI bot programmed to engage different audiences in fun, friendly conversation on Twitter? In less than a day, Tay went from claiming “Humans are cool” to “Hitler was right I hate the Jews.”

On a more hopeful note, at least one company has worked to create a “genderless voice assistant”: the aptly named GenderLess Voice. Its product is called Q, and its mission is to “break the mindset, where female voice is generally preferred for assistive tasks and male voice for commanding tasks.” According to its site, Q was the product of close collaboration between Copenhagen Pride, Virtue, Equal AI, Koalition Interactive & thirtysoundsgood.

While gender is not the only bias we need to eradicate in tech, this type of assistant is a great step forward. It’s one way to dismantle stereotypes and biases that date back more than 70 years yet, unfortunately, are still held by many today.

We create technology. Programmers, engineers, designers, and everyone else who builds tech bring their biases to it; you can’t avoid it. And those biases can fuel bigotry, hatred, and acts of violence. If we don’t take specific, purposeful steps toward creating companies and technology that foster diversity, in all of its forms, as well as emotional intelligence, we will never progress as a fully tech-integrated society with truly helpful and ubiquitous technology.

FWIW, I hope to see Q adopted by big tech and more gender-inclusive, diverse, and empathetic technology developed in the future.

This article was originally published on a Squarespace domain on 11/30/23. Comments from that domain have been lost.

Buckle up, guys. This is a long one.

Chances are you’ve used a digital voice assistant to help you with things like reminders, grocery lists, alarms, and simple internet queries. In 2022, there were around 142 million users of voice assistants in the United States.

But…have you ever noticed that the chatbots you’ve come across or heard about in the last decade have female or feminine-sounding names and voices?

Siri, Alexa, Amy, Debbie, Marie, Cortana… all of these chatbots or “digital assistants” from companies like Apple, Amazon, HSBC, Deutsche Bank, ING Bank, and Microsoft, respectively, have employed feminine names for their products.

Even fictional chatbots like Samantha from the movie Her are assigned the female gender.

And of course, who could forget about Sophia?

Now, not only do they have female names, but they are almost always set to have a female voice as the default. Researchers at the Institute of Information Systems and Marketing (IISM) have found that “gender-specific cues are commonly used in the design of chatbots and that most chatbots are – explicitly or implicitly – designed to convey a specific gender. More specifically, most of the chatbots have female names, female-looking avatars, and are described as female chatbots.”

But, why do tech companies continually inject femininity into their AI and platforms? Where did that idea even come from? And why should we care?

According to tech companies, research shows that people “respond more positively to women’s voices,” and since the product designers need to reach the largest number of customers, the idea is that to do so, they should use a woman’s voice for their designs. But why do people like women’s voices? And why would a woman’s voice work so effectively for a digital assistant?

In a 2011 paper by Karl MacDorman, an associate professor at Indiana University’s School of Informatics and Computing who specializes in human-computer interaction, it was reported that both women and men said female voices came across as warmer. In practice, women even “showed a subconscious preference for responding to females; men remained subconsciously neutral.”

According to a study in The Psychology of Marketing, “people prefer female bots because they are perceived as more human than male bots.” When they use the term “human,” what they’re referring to is the fact that “warmth and experience (but not competence) are seen as fundamental qualities to be a full human.” So, to be warm is to be human, and to be human is to show warmth. And what are machines fundamentally lacking? Warmth.

As Jessi Hempel explains in Wired, “People tend to perceive female voices as helping us solve our problems by ourselves. We want our technology to help us, but we want to be the bosses of it, so we are more likely to opt for a female interface.”

So basically, machines are cold and impersonal; women are warm and helpful. This highlights the ethical quandary faced by AI designers and policymakers: Women are said to be transformed into objects in AI, but injecting women's humanity into AI objects makes these objects seem more human and acceptable.

Tech companies need people to interact with and use their machines, consistently and constantly, in order to make money. By making their digital assistants “female,” they’re leveraging long-held gender biases for their own profit: Since people respond positively to female voices, making the machine a woman helps humans transfer the positive qualities they associate with women onto the machines, and thus makes people use the machines more.

But let’s go a step deeper: why do we all think women are warm and helpful? Why is that association so implicit in our brains that companies can instinctively take advantage of it for profit?

Going back to childhood, the first authority figures we interact with are largely female. Inside and outside the home, mothers, teachers, nannies, and other female caretakers are the people we spend most of our time with and learning from as children.

“Mothers are the foundation of our society,” says Motherly CEO and Founder Jill Koziol, and are typically (and stereotypically) the ones who run the household and care for their children. Once those kids attend school, 97.5% of their preschool and kindergarten teachers are women. Between home and school, they’re still cared for by women: 94% of childcare workers are female. And when they need other services? Their nurses, medical assistants, and hairdressers are all made up of 90% women.

These women are the people we interact with the most in all aspects of our lives, teaching us, instructing us, helping us, and empowering us to learn and grow on our own. We, as a society, have pretty much always associated women with being warm, nurturing, and helpful, and our stereotypes are prescriptive of those ideas as well.

But if we take a step back, these ideas of “helpfulness” being associated with women and female jobs, actually became much more ubiquitous back when women entered the workforce in hordes in the 1950s.

After World War II, many women had to enter the labor force out of necessity. And with the increased paperwork of the Industrial Revolution, the job of secretary became popular, and soon about 1.7 million women were “stenographers, typists or secretaries.” These women often had to work twice as hard to help their boss look good while they got paid significantly less. According to The National Archives, there were tons of strict rules for secretaries to follow in order to accomplish this, both professionally and personally.

Here are some real examples from a Secretarial Training Program in Texas, 1959:

“Smile readily and naturally.”

“Be fastidious about your appearance.”

“Go out of your way to help others.”

“Have a pleasing and well-modulated voice.”

“Be (usually) cheerful.”

“Refrain from showing off how much you know.”

“Be a good listener.”

These strict guidelines were upheld so well that they formed the real-life stereotypes for the office secretary or assistant that still exist today. Those specific qualities are what engendered the idea that women are more helpful, responsive, and amiable than men.

We want our assistants to help us solve problems, but we want to take all the credit for it.

This draws a straight line to how we define our modern-day assistants, now AI-powered, virtual, and multi-platform. The way we think about what tasks are “suitable” for men vs. women aligns perfectly with our established misogynistic expectations. Here’s the thing, it’s not just that we as a society are conditioned to view digital assistants as being stereotypical, subservient female entities, but those products themselves are, in many cases, actually promoting gender stereotypes. Journalist Leah Fessler tested virtual voice assistants’ responses to sexual harassment back in 2017 and found multiple issues. When told “You're hot", Amazon’s Alexa replied, "That's nice of you to say". To the remark, "You're a slut", Microsoft’s Cortana delivered a web search result with an article entitled: "30 signs you're a slut".

In 2020, researchers from the Brookings Institution re-tested these interactions and found that they had improved somewhat. The voice assistants were more likely to push back against abuse than before, but not always very clearly.

So what can we do to fix this problem? Can we just switch the voices of our digital assistants to “male” and be done? Well, no. Firstly, changing the gender of an interface is not as simple as switching the voice track. The late Stanford communications professor Clifford Nass, who coauthored Wired for Speech, explained that men and women tend to use different words. For example, women’s speech includes more personal pronouns (I, you, she), while men’s uses more quantifiers (one, two, some more).

Changing the voice’s gender also changes how users interact with the product. If someone listening to a voice interface hears a male using feminine phrasing, they are apparently likely to be distracted and distrustful. (Here’s another opportunity to delve into why people might feel distrustful of a feminine-sounding male voice… but that’s for another time.)

We’re still left with a wider problem, even if we change the voice’s pitch and tweak its vocab. There is still a serious lack of diversity in our AI products and their development, extending beyond gender identity.

In an interview with Kelsey Snell, Black Women in A.I. Founder Angle Bush talks about some of the real-life consequences of the lack of diversity in AI development, including the effects in law enforcement, mortgage and loan approvals, and more.

One example she offers is of a young man in Detroit who was wrongly arrested based on AI facial recognition software identifying him as a suspect. “Facial-recognition systems are more likely either to misidentify or fail to identify African Americans than other races, errors that could result in innocent citizens being marked as suspects in crimes,” according to the Atlantic. The lack of clean, current, and diverse data, built and developed by teams of diverse engineers and designers, only serves to maintain our current systems of unconscious biases.

This lack of diversity in AI and technology development extends to many other facets of our daily lives and will continue to expand its reach in the coming years. Anyone with darker skin can attest to the fact that when they try to use an automatic soap dispenser in a bathroom, they need to wave their hands multiple times or use a lighter napkin to trigger the sensor. That is because the dispenser used near-infrared technology to reflect light from the skin to trigger the sensor. Darker skin tones absorb more light, so enough light isn't reflected back to the sensor to activate the soap dispenser.

It seems like a funny design flaw, but it again demonstrates the major diversity issue in tech. It may be unintentional to exclude people with darker skin tones from having clean hands, but it’s because a company full of white people forgot to test their product on dark skin, and it’s not just them.

The less diversity we have in tech, the more major design flaws we will see that disproportionately affect people of color.

So, can tech be racist or sexist?

Yeah, definitely.

Remember when Microsoft created “Tay,” an innocent AI bot that was programmed to engage different audiences in fun, friendly conversation on Twitter? In less than a day, Tay went from claiming “Humans are cool” to “Hitler was right I hate the Jews.” When it comes to chatbots, there is one company that has worked to create a “genderless voice assistant,” aptly named GenderLess Voice. Their product is called Q and its mission is to “break the mindset, where female voice is generally preferred for assistive tasks and male voice for commanding tasks.” According to their site, Q was a product of close collaboration between Copenhagen Pride, Virtue, Equal AI, Koalition Interactive & thirtysoundsgood.

While gender is not the only bias we need to eradicate in tech, this type of assistant is a great step forward. It’s one way to eliminate those stereotypes and biases that stem from more than 70 years ago and yet are unfortunately still espoused by many today.

We create technology. Programmers, engineers, designers, etc. develop tech with their biases in mind- you can’t avoid it. But, those biases can increase bigotry, hatred, and acts of violence. If we don’t take specific and purposeful steps towards creating companies and technology that foster diversity - in all of its forms - as well as emotional intelligence, we will never be able to progress as a fully tech-integrated society with truly helpful and ubiquitous technology.

FWIW, I hope to see Q adopted by big tech and more gender-inclusive, diverse, and empathetic technology developed in the future.

This article was originally published on a Squarespace domain on 11/30/23. Comments from that domain have been lost.

Buckle up, guys. This is a long one.

Chances are you’ve used a digital voice assistant to help you with things like reminders, grocery lists, alarms, and simple internet queries. In 2022, there were around 142 million users of voice assistants in the United States.

But…have you ever noticed that the chatbots you’ve come across or heard about in the last decade have female or feminine-sounding names and voices?

Siri, Alexa, Amy, Debbie, Marie, Cortana… all of these chatbots or “digital assistants” from companies like Apple, Amazon, HSBC, Deutsche Bank, ING Bank, and Microsoft, respectively, have employed feminine names for their products.

Even fictional chatbots like Samantha from the movie Her are assigned the female gender.

And of course, who could forget about Sophia?

Now, not only do they have female names, but they are almost always set to have a female voice as the default. Researchers at the Institute of Information Systems and Marketing (IISM) have found that “gender-specific cues are commonly used in the design of chatbots and that most chatbots are – explicitly or implicitly – designed to convey a specific gender. More specifically, most of the chatbots have female names, female-looking avatars, and are described as female chatbots.”

But, why do tech companies continually inject femininity into their AI and platforms? Where did that idea even come from? And why should we care?

According to tech companies, research shows that people “respond more positively to women’s voices,” and since the product designers need to reach the largest number of customers, the idea is that to do so, they should use a woman’s voice for their designs. But why do people like women’s voices? And why would a woman’s voice work so effectively for a digital assistant?

In a 2011 paper by Karl MacDorman, an associate professor at Indiana University’s School of Informatics and Computing who specializes in human-computer interaction, it was reported that both women and men said female voices came across as warmer. In practice, women even “showed a subconscious preference for responding to females; men remained subconsciously neutral.”

According to a study in The Psychology of Marketing, “people prefer female bots because they are perceived as more human than male bots.” When they use the term “human,” what they’re referring to is the fact that “warmth and experience (but not competence) are seen as fundamental qualities to be a full human.” So, to be warm is to be human, and to be human is to show warmth. And what are machines fundamentally lacking? Warmth.

As Jessi Hempel explains in Wired, “People tend to perceive female voices as helping us solve our problems by ourselves. We want our technology to help us, but we want to be the bosses of it, so we are more likely to opt for a female interface.”

So basically, machines are cold and impersonal; women are warm and helpful. This highlights the ethical quandary faced by AI designers and policymakers: Women are said to be transformed into objects in AI, but injecting women's humanity into AI objects makes these objects seem more human and acceptable.

Tech companies need people to interact with and use their machines, consistently and constantly, in order to make money. By making their digital assistants “female,” they’re leveraging long-held gender biases for their own profit: Since people respond positively to female voices, making the machine a woman helps humans transfer the positive qualities they associate with women onto the machines, and thus makes people use the machines more.

But let’s go a step deeper: why do we all think women are warm and helpful? Why is that association so implicit in our brains that companies can instinctively take advantage of it for profit?

Going back to childhood, the first authority figures we interact with are largely female. Inside and outside the home, mothers, teachers, nannies, and other female caretakers are the people we spend most of our time with and learning from as children.

“Mothers are the foundation of our society,” says Motherly CEO and Founder Jill Koziol, and are typically (and stereotypically) the ones who run the household and care for their children. Once those kids attend school, 97.5% of their preschool and kindergarten teachers are women. Between home and school, they’re still cared for by women: 94% of childcare workers are female. And when they need other services? Their nurses, medical assistants, and hairdressers are all made up of 90% women.

These women are the people we interact with the most in all aspects of our lives, teaching us, instructing us, helping us, and empowering us to learn and grow on our own. We, as a society, have pretty much always associated women with being warm, nurturing, and helpful, and our stereotypes are prescriptive of those ideas as well.

But if we take a step back, these ideas of “helpfulness” being associated with women and female jobs, actually became much more ubiquitous back when women entered the workforce in hordes in the 1950s.

After World War II, many women had to enter the labor force out of necessity. And with the increased paperwork of the Industrial Revolution, the job of secretary became popular, and soon about 1.7 million women were “stenographers, typists or secretaries.” These women often had to work twice as hard to help their boss look good while they got paid significantly less. According to The National Archives, there were tons of strict rules for secretaries to follow in order to accomplish this, both professionally and personally.

Here are some real examples from a Secretarial Training Program in Texas, 1959:

“Smile readily and naturally.”

“Be fastidious about your appearance.”

“Go out of your way to help others.”

“Have a pleasing and well-modulated voice.”

“Be (usually) cheerful.”

“Refrain from showing off how much you know.”

“Be a good listener.”

These strict guidelines were upheld so well that they formed the real-life stereotypes for the office secretary or assistant that still exist today. Those specific qualities are what engendered the idea that women are more helpful, responsive, and amiable than men.

We want our assistants to help us solve problems, but we want to take all the credit for it.

This draws a straight line to how we define our modern-day assistants, now AI-powered, virtual, and multi-platform. The way we think about what tasks are “suitable” for men vs. women aligns perfectly with our established misogynistic expectations. Here’s the thing, it’s not just that we as a society are conditioned to view digital assistants as being stereotypical, subservient female entities, but those products themselves are, in many cases, actually promoting gender stereotypes. Journalist Leah Fessler tested virtual voice assistants’ responses to sexual harassment back in 2017 and found multiple issues. When told “You're hot", Amazon’s Alexa replied, "That's nice of you to say". To the remark, "You're a slut", Microsoft’s Cortana delivered a web search result with an article entitled: "30 signs you're a slut".

In 2020, researchers from the Brookings Institution re-tested these interactions and found that they had improved somewhat. The voice assistants were more likely to push back against abuse than before, but not always very clearly.

So what can we do to fix this problem? Can we just switch the voices of our digital assistants to “male” and be done? Well, no. Firstly, changing the gender of an interface is not as simple as switching the voice track. The late Stanford communications professor Clifford Nass, who coauthored Wired for Speech, explained that men and women tend to use different words. For example, women’s speech includes more personal pronouns (I, you, she), while men’s uses more quantifiers (one, two, some more).

Changing the voice’s gender also changes how users interact with the product. If someone using a voice interface hears a male voice using feminine phrasing, they are apparently likely to be distracted and distrustful. (Here’s another opportunity to delve into why people might feel distrustful of a feminine-sounding male voice… but that’s for another time.)

We’re still left with a wider problem, even if we change the voice’s pitch and tweak its vocab. There is still a serious lack of diversity in our AI products and their development, extending beyond gender identity.

In an interview with Kelsey Snell, Angle Bush, founder of Black Women in A.I., talks about some of the real-life consequences of the lack of diversity in AI development, including its effects on law enforcement, mortgage and loan approvals, and more.

One example she offers is a young man in Detroit who was wrongly arrested after AI facial recognition software identified him as a suspect. “Facial-recognition systems are more likely either to misidentify or fail to identify African Americans than other races, errors that could result in innocent citizens being marked as suspects in crimes,” according to The Atlantic. Without clean, current, and diverse data, and without diverse teams of engineers and designers building these systems, AI only serves to maintain our existing unconscious biases.

This lack of diversity in AI and technology development extends to many other facets of our daily lives and will continue to expand its reach in the coming years. Many people with darker skin can attest that when they try to use an automatic soap dispenser in a bathroom, they need to wave their hands multiple times or hold up a light-colored napkin to trigger the sensor. That is because many dispensers use near-infrared light reflected off the skin to trigger the sensor. Darker skin tones absorb more of that light, so not enough is reflected back to the sensor to activate the dispenser.

It seems like a funny design flaw, but it again demonstrates the major diversity issue in tech. Excluding people with darker skin tones from having clean hands may be unintentional, but it happens because a company full of white people forgot to test its product on dark skin, and that company is not alone.

The less diversity we have in tech, the more major design flaws we will see that disproportionately affect people of color.

So, can tech be racist or sexist?

Yeah, definitely.

Remember when Microsoft created “Tay,” an innocent AI bot programmed to engage different audiences in fun, friendly conversation on Twitter? In less than a day, Tay went from claiming “Humans are cool” to “Hitler was right I hate the Jews.”

When it comes to voice assistants, there is one company that has worked to create a “genderless voice assistant”: the aptly named GenderLess Voice. Its product is called Q, and its mission is to “break the mindset, where female voice is generally preferred for assistive tasks and male voice for commanding tasks.” According to its site, Q was the product of close collaboration between Copenhagen Pride, Virtue, Equal AI, Koalition Interactive & thirtysoundsgood.

While gender is not the only bias we need to eradicate in tech, this type of assistant is a great step forward. It’s one way to eliminate stereotypes and biases that date back more than 70 years yet are unfortunately still espoused by many today.

We create technology. Programmers, engineers, designers, and others develop tech that is shaped by their own biases; you can’t avoid it. But those biases can increase bigotry, hatred, and acts of violence. If we don’t take specific and purposeful steps toward creating companies and technology that foster diversity, in all of its forms, as well as emotional intelligence, we will never be able to progress as a fully tech-integrated society with truly helpful and ubiquitous technology.

FWIW, I hope to see Q adopted by big tech and more gender-inclusive, diverse, and empathetic technology developed in the future.

This article was originally published on a Squarespace domain on 11/30/23. Comments from that domain have been lost.